Albedo to EARS, Pt. 4 - Denoising and the Spatial Cache

Happy Tuesday!

This week I had the chance to play around with Intel’s Open Image Denoise as a post-process to the main rendering loop. I also took some time to track down the authors’ source for ADRRS and EARS, to get a sense of how much massaging it will need before I can drop it into this renderer.

As always, some new results before jumping into things:

Pretty incredible for such a sparsely sampled input!

Project proposal: project-proposal.pdf

First post in the series: Directed Research at USC

GitHub repository: roblesch/roulette

Open Image Denoise

Before jumping into OIDN it’s useful to recall its relevance.

Intel’s Open Image Denoise is used in the EARS algorithm for approximating ground truth images. Recall the formulation of the splitting factor:

\[n_i(x) = \gamma_S(n_{i-1}(x)) = \sqrt{\frac{V_y(x)}{\mathbb{V}[\langle I;n_{i-1}\rangle]}\frac{\mathbb{E}[c(\langle I;n_{i-1}\rangle)]}{\mathbb{E}[c(\langle H(x)\rangle)\mid x]}}\]

The ratio \(\frac{V_y(x)}{\mathbb{V}[\langle I;n_{i-1}\rangle]}\) relates the local variance estimate \(V_y(x)\) to the “ground-truth” variance \(\mathbb{V}[\langle I;n_{i-1}\rangle]\). Rather than take a final ground-truth image as input, EARS uses OIDN to iteratively approximate ground truth when evaluating local variance.
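To make the formula a little more concrete, here is a minimal sketch that evaluates it from its four ingredients. The function and parameter names are hypothetical and do not come from the EARS source; the fallback to a factor of 1 when statistics are missing is also an assumption of this sketch.

```cpp
#include <cmath>

// Hypothetical helper that evaluates the splitting factor formula.
//   localVariance : V_y(x), the variance estimate at location x
//   imageVariance : V[<I; n_{i-1}>], the "ground-truth" variance
//   imageCost     : E[c(<I; n_{i-1}>)], expected cost of the image estimate
//   localCost     : E[c(<H(x)>) | x], expected cost of continuing from x
inline double splittingFactor(double localVariance, double imageVariance,
                              double imageCost, double localCost) {
    // Assumption: with no usable statistics yet, keep exactly one continuation.
    if (imageVariance <= 0.0 || localCost <= 0.0) {
        return 1.0;
    }
    return std::sqrt((localVariance / imageVariance) * (imageCost / localCost));
}
```

With equal costs, a location whose variance is four times the image variance gets a factor of 2, and one with a quarter of the image variance gets 0.5. Factors above 1 correspond to splitting and factors below 1 to Russian roulette.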

What is OIDN?

The OIDN repository has an excellent overview on the project with snippets and samples. Check it out if you’re curious!

Setting up OIDN

The easiest way to get OIDN and its dependencies is by installing the oneAPI Rendering Toolkit. It can also be built from source by including the repository and pulling the training weights from git lfs, but the installer meets all my needs for now.

CMake needs to know where to find OIDN. The oneAPI OIDN Sample Project has a snippet that solves this:

    # Locate the oneAPI install: prefer the environment, else the platform default.
    set(ONEAPI_ROOT "")
    if(DEFINED ENV{ONEAPI_ROOT})
      set(ONEAPI_ROOT "$ENV{ONEAPI_ROOT}")
      message(STATUS "ONEAPI_ROOT FROM ENVIRONMENT: ${ONEAPI_ROOT}")
    else()
      if(WIN32)
        set(ONEAPI_ROOT "C:/Program Files (x86)/Intel/oneAPI")
      else()
        set(ONEAPI_ROOT /opt/intel/oneapi)
      endif()
      message(STATUS "ONEAPI_ROOT DEFAULT: ${ONEAPI_ROOT}")
    endif(DEFINED ENV{ONEAPI_ROOT})

    # OIDN lives under the oneAPI root.
    set(OIDN_ROOT ${ONEAPI_ROOT}/oidn/latest)
    set(OIDN_INCLUDE_DIR ${OIDN_ROOT}/include)
    set(OIDN_LIB_DIR ${OIDN_ROOT}/lib)

    # Resolve the OIDN and TBB packages shipped with oneAPI.
    find_package(OpenImageDenoise REQUIRED PATHS ${ONEAPI_ROOT})
    find_package(TBB REQUIRED PATHS ${ONEAPI_ROOT})

    # Make headers and libraries visible, then link the application.
    include_directories(${OIDN_INCLUDE_DIR} ${OIDN_ROOT} ${OIDN_GSG_SOURCE_DIR}/src)
    link_directories(${OIDN_ROOT}/lib)
    target_link_libraries(${APP_NAME} OpenImageDenoise)

I also needed to bundle the DLLs for OIDN and TBB with the executable on Windows. This was simple enough with a couple of extra snippets. Where OIDN is resolved, export the paths to the OIDN and TBB binaries to the parent scope:

    if (WIN32)
      set(OIDN_BIN_DIR ${OIDN_ROOT}/bin PARENT_SCOPE)
      set(TBB_BIN_DIR ${ONEAPI_ROOT}/tbb/latest/redist/intel64/vc14 PARENT_SCOPE)
    endif()

Then copy the DLLs after building the main executable:

    add_custom_command(TARGET renderer POST_BUILD
      COMMAND ${CMAKE_COMMAND} -E copy_if_different
      "${OIDN_BIN_DIR}/OpenImageDenoise.dll"
      $<TARGET_FILE_DIR:renderer>)
    add_custom_command(TARGET renderer POST_BUILD
      COMMAND ${CMAKE_COMMAND} -E copy_if_different
      "${TBB_BIN_DIR}/tbb12.dll"
      $<TARGET_FILE_DIR:renderer>)

On macOS I had to add an rpath pointing at the TBB libs:

    add_custom_command(TARGET renderer POST_BUILD
      COMMAND ${CMAKE_INSTALL_NAME_TOOL} -add_rpath
      "${TBB_LIB_DIR}"
      $<TARGET_FILE:renderer>)

I was curious whether oneAPI would work on Apple Silicon; it seemed to work fine as long as I told CMake to target x86.

Using OIDN

Once OIDN is available, integrating it into the renderer is straightforward. The OIDN repository has a snippet for filtering: all I need to do is provide it with buffers holding the albedo, normals and estimated color of an image, and it will write a denoised version to an output buffer. Since FrameBuffer stores pixels as a contiguous buffer of floats, I could pass these buffers directly to OIDN and didn’t need to write any reshaping on the input or output. Convenient!
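For reference, a filtering call along the lines of the basic example in the OIDN documentation might look like the sketch below. The `Image` struct is a hypothetical stand-in for FrameBuffer, and `USE_OIDN` is a made-up build flag so that the sketch still compiles where OIDN is not installed (in that case it just passes the noisy color through). It explicitly asks for a CPU device so that plain host pointers can be handed over; treat that choice as an assumption rather than a requirement.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

#ifdef USE_OIDN  // hypothetical flag: define it when OIDN is available and linked
#include <OpenImageDenoise/oidn.hpp>
#endif

// Hypothetical stand-in for FrameBuffer: row-major RGB stored as contiguous floats.
struct Image {
    std::size_t width = 0, height = 0;
    std::vector<float> pixels;  // width * height * 3 floats
    Image(std::size_t w, std::size_t h)
        : width(w), height(h), pixels(w * h * 3, 0.0f) {}
};

// Denoises `color`, using `albedo` and `normal` as auxiliary feature images.
inline Image denoise(Image& color, Image& albedo, Image& normal) {
    if (albedo.pixels.size() != color.pixels.size() ||
        normal.pixels.size() != color.pixels.size()) {
        throw std::invalid_argument("feature buffers must match the color buffer");
    }
    Image output(color.width, color.height);
#ifdef USE_OIDN
    oidn::DeviceRef device = oidn::newDevice(oidn::DeviceType::CPU);
    device.commit();

    // "RT" is OIDN's generic ray tracing denoising filter.
    oidn::FilterRef filter = device.newFilter("RT");
    filter.setImage("color", color.pixels.data(), oidn::Format::Float3, color.width, color.height);
    filter.setImage("albedo", albedo.pixels.data(), oidn::Format::Float3, color.width, color.height);
    filter.setImage("normal", normal.pixels.data(), oidn::Format::Float3, color.width, color.height);
    filter.setImage("output", output.pixels.data(), oidn::Format::Float3, color.width, color.height);
    filter.set("hdr", true);  // the color buffer holds unclamped radiance
    filter.commit();
    filter.execute();

    const char* message = nullptr;
    if (device.getError(message) != oidn::Error::None) {
        throw std::runtime_error(message);
    }
#else
    // Fallback without OIDN: copy the noisy color through unchanged.
    output.pixels = color.pixels;
#endif
    return output;
}
```

Because each image is already a flat array of three floats per pixel, the default strides work and no reshaping is needed, which is the convenience described above.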

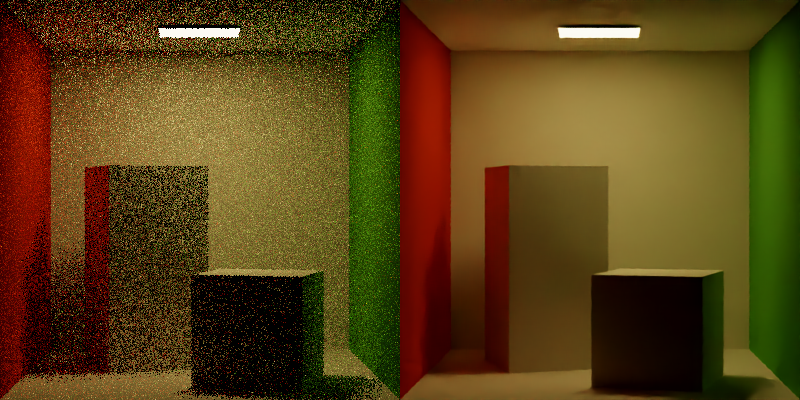

The easiest way to achieve this is by taking advantage of the debugging functionality I already have in place to trace albedo and normals and throw those into a buffer. In the future I’ll extend the FrameBuffer with extra buffers for albedo and normals, and have the path tracing loop cache those values as it goes. For now, tracing them separately is enough to get some results:

Very cool! It was pretty easy to set up and integrate into the render loop, and I’m surprised how easy it was to get it working on both my desktop and my ARM MacBook.

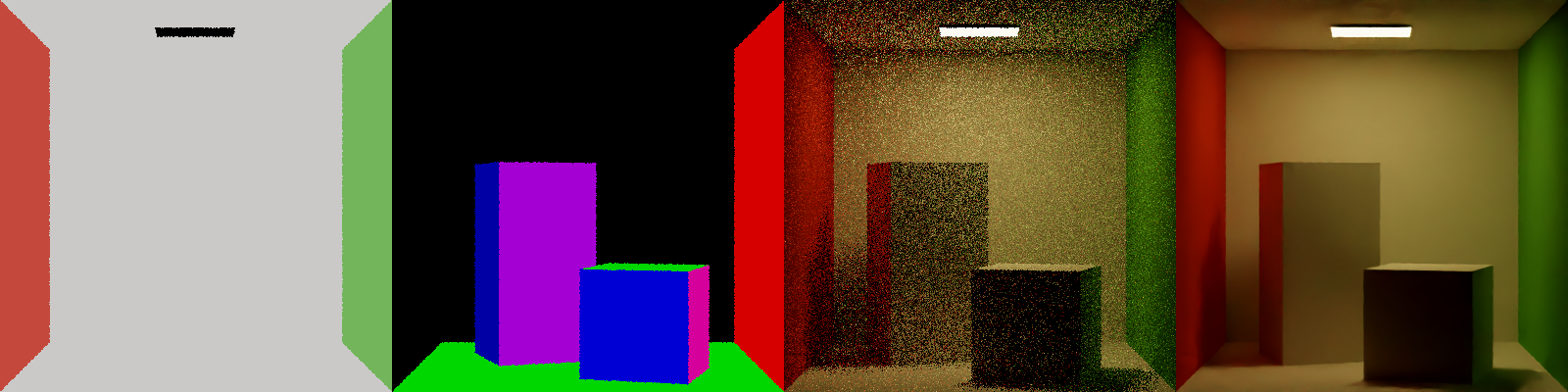
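The extra albedo and normal buffers mentioned above could be as simple as a per-pixel running mean that the path tracing loop feeds at the first hit of each sample. This is only a sketch of one possible design; `FeatureBuffer`, `Vec3` and `add` are hypothetical names, not part of the renderer.

```cpp
#include <array>
#include <cstddef>
#include <vector>

using Vec3 = std::array<float, 3>;

// Hypothetical auxiliary buffer: keeps a running per-pixel mean of an RGB
// feature (albedo or shading normal) in a contiguous float array.
class FeatureBuffer {
public:
    FeatureBuffer(std::size_t width, std::size_t height)
        : width_(width),
          data_(width * height * 3, 0.0f),
          counts_(width * height, 0u) {}

    // Folds one sample into the running mean of pixel (x, y).
    void add(std::size_t x, std::size_t y, const Vec3& value) {
        const std::size_t pixel = y * width_ + x;
        const float n = static_cast<float>(++counts_[pixel]);
        for (std::size_t c = 0; c < 3; ++c) {
            float& mean = data_[pixel * 3 + c];
            mean += (value[c] - mean) / n;  // incremental mean update
        }
    }

    // Contiguous RGB floats, the same layout as the color buffer.
    float* data() { return data_.data(); }
    const float* data() const { return data_.data(); }

private:
    std::size_t width_;
    std::vector<float> data_;
    std::vector<unsigned> counts_;
};
```

Keeping the same three-floats-per-pixel layout as the color buffer means these buffers can be handed to the denoiser without any conversion.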

Exploring OIDN

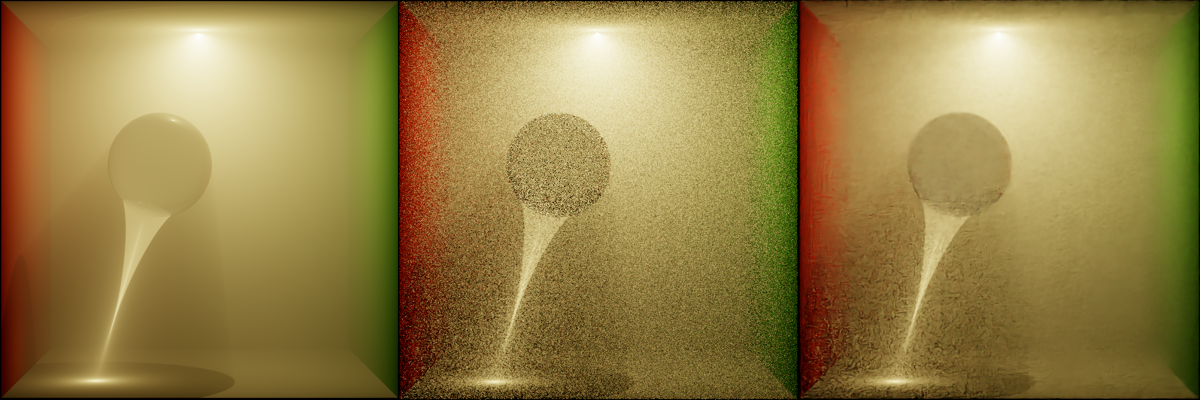

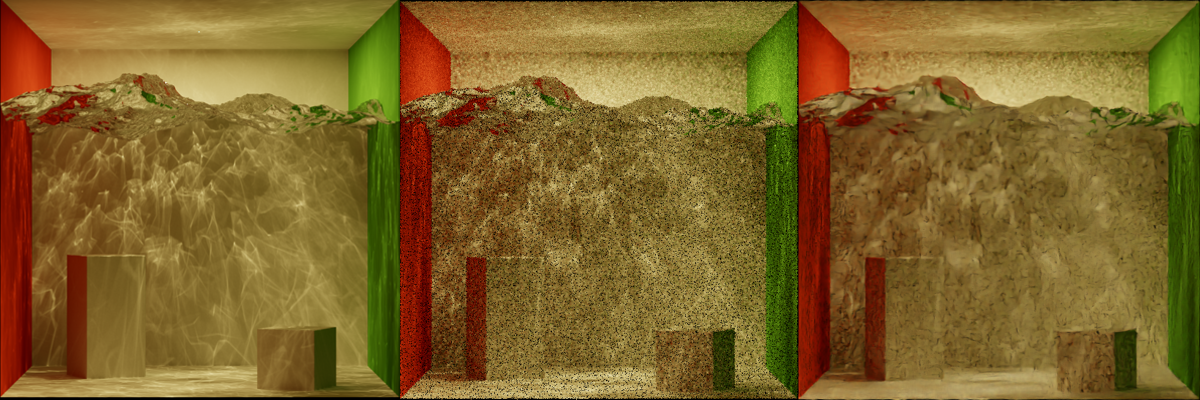

OIDN produces incredible results, and blazingly fast too. Is rendering solved? Why am I even working on this project anymore? Although it is an incredibly powerful tool, OIDN is not a miracle solution to the rendering problem; as a denoiser it still relies on quality inputs. I was interested in seeing what OIDN could do with more complex phenomena like non-atmospheric participating media and caustics. I don’t have nearly enough time to add these materials to my renderer right now, so I instead slapped OIDN onto Tungsten to test it on Benedikt’s volumetric caustic and water caustic scenes.

At only 1 spp this isn’t the fairest test, but I think it emphasizes the point: without enough information in the input, OIDN cannot deduce a physically plausible denoised output. As seen above, regions with complex caustic phenomena come out of denoising with additional artifacts.

Spatial Caches

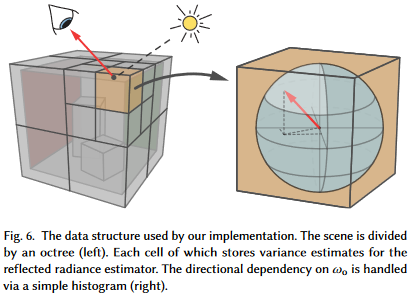

With OIDN squared away, the other key component of ADRRS and EARS is the Spatial Cache.

Fortunately, EARS’ author Alexander Rath has some source available on his GitHub that provides reference implementations for both ADRRS and EARS.

Peeking into the source, the octtree.h header seems to provide everything needed for both ADRRS and EARS. Even better, it only depends on std::array and std::vector. Awesome!
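To give a feel for how little machinery a spatial cache like this needs, here is a minimal octree over the unit cube built from nothing but std::array and std::vector. It is an illustrative sketch only and does not reproduce the layout or interface of octtree.h; the `Octree` class, its `value` field and the method names are all hypothetical.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Hypothetical minimal octree over the unit cube [0,1)^3.
class Octree {
public:
    struct Node {
        // Indices of the eight children. All zeros marks a leaf, which is
        // safe because the root (index 0) is never anyone's child.
        std::array<std::size_t, 8> children{};
        float value = 0.0f;  // placeholder for a cached statistic
        bool isLeaf() const { return children[0] == 0; }
    };

    Octree() : nodes_(1) {}  // start with a single root leaf

    // Splits a leaf into eight children that inherit its value.
    // Returns the index of the first child.
    std::size_t split(std::size_t index) {
        const std::size_t first = nodes_.size();
        const float value = nodes_[index].value;
        for (std::size_t i = 0; i < 8; ++i) {
            Node child;
            child.value = value;
            nodes_.push_back(child);
        }
        // Index again after push_back, since the vector may have reallocated.
        for (std::size_t i = 0; i < 8; ++i) {
            nodes_[index].children[i] = first + i;
        }
        return first;
    }

    // Descends to the leaf containing p (each component in [0,1)).
    std::size_t lookup(std::array<float, 3> p) const {
        std::size_t index = 0;
        while (!nodes_[index].isLeaf()) {
            std::size_t child = 0;
            for (std::size_t axis = 0; axis < 3; ++axis) {
                p[axis] *= 2.0f;  // rescale into the child's local coordinates
                if (p[axis] >= 1.0f) {
                    child |= std::size_t{1} << axis;
                    p[axis] -= 1.0f;
                }
            }
            index = nodes_[index].children[child];
        }
        return index;
    }

    Node& node(std::size_t index) { return nodes_[index]; }
    std::size_t size() const { return nodes_.size(); }

private:
    std::vector<Node> nodes_;
};
```

Storing all nodes in one vector and referring to children by index keeps the structure trivially copyable and cache friendly, which is one plausible reason a header like this can get away with so few dependencies.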

The one drawback is that Mitsuba does not seem to be a first-class citizen on Windows these days. I am able to build it fine on my Mac, but I’m still wrestling with the build on my desktop. Tungsten, on the other hand, can only be built on my desktop, due to its dependence on the SSE3 capabilities of Intel chips. Worst case, I can split work on the different projects across my two devices. Ideally I’d like to get Mitsuba building on my desktop without resorting to a VM or container.

But these are problems for the future. Tune in next time, when I’ll share progress on moving my implementation towards integration of ADRRS and EARS. Thanks for reading!