Albedo to EARS, Pt. 5 - The EARS Tracer

Happy National Cereal Day.

This week I took steps toward enabling ADRRS and EARS. In this post I’ll walk through how I transplanted author Alexander Rath’s implementation into the renderer, including some necessary changes to the tracing algorithm. The post covers the work leading up to ADRRS and EARS, so it is a bit lighter on visible developments.

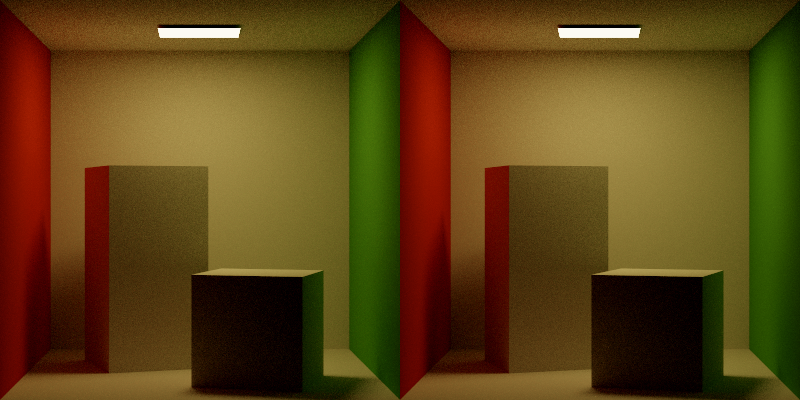

As usual, some results -

Two images that look about the same are not very exciting, but in this case that’s the best outcome. The left render uses Tungsten’s formulation of forward recursive path tracing, and the right uses EARS’ formulation.

Project proposal: project-proposal.pdf

First post in the series: Directed Research at USC

GitHub repository: roblesch/roulette

Code Additions

- Added EARS Integrator, EARS Tracer

- Added Octtree

Next Steps

- Map point to octtree bin

- Update bins with variance estimates

Towards EARS

My life is made infinitely easier by the author’s open-source implementation of ADRRS and EARS. The implementation is provided as a recursive forward path integrator for Mitsuba. Rather than find where ADRRS and EARS slot into Tungsten’s render algorithm, I implemented an alternate tracer and integrator on the same interface.

Reformulating Tracing

EARS and Tungsten both implement recursive forward path tracing, and both use a standard recursive formulation of forward Monte Carlo light transport with multiple importance sampling:

    Radiance(scene, ray)
        it = scene->intersect(ray)
        Lo += SampleDirectLighting(it)
        Lo += SampleBSDF(it)
        Lo += SampleIndirectLighting(it) // Recursive step

Where they differ is in their termination conditions and how they evaluate shadow rays. Tungsten mixes shadow rays and indirect illumination, while EARS evaluates shadow rays as part of direct lighting. If you’re interested, my implementation of Tungsten’s tracing is available here, and my implementation of EARS’ is here.
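Both formulations combine the direct-lighting and BSDF-sampling estimates with multiple importance sampling. As a minimal sketch of that weighting, assuming the power heuristic with exponent 2 (a common choice, not confirmed here as what either codebase uses), and with `powerHeuristic` as a hypothetical helper name:

```cpp
#include <cassert>

// Power heuristic (exponent 2) for combining two sampling strategies.
// pdfA: density of the strategy that generated the sample.
// pdfB: density the other strategy would assign to the same sample.
// Hypothetical helper for illustration; not taken from Tungsten or EARS.
inline double powerHeuristic(double pdfA, double pdfB) {
    assert(pdfA >= 0.0 && pdfB >= 0.0);
    double a2 = pdfA * pdfA;
    double b2 = pdfB * pdfB;
    // Guard against 0/0 when neither strategy can produce the sample.
    return (a2 + b2 > 0.0) ? a2 / (a2 + b2) : 0.0;
}
```

For a given sample the two weights sum to one, so a contribution reachable by both light sampling and BSDF sampling is not counted twice.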

Roulette and Splitting

With the base implementation working, the next steps to performing ADRRS and EARS are the addition of the spatial cache, filling the spatial cache with the right values, and using those values to compute the splitting factor that determines if a path is terminated (RR) or split (S).

Augmenting tracing with RRS looks something like this -

    Radiance(scene, ray)
        it = scene->intersect(ray)
        // find the bin in the octtree that contains `it`
        node = cacheLookup(it)
        // 0 : termination, >= 1 : continue or split
        rrs = RRSMethod.evaluate(node, it)
        for n = 1 ... rrs do
            Lo += SampleDirectLighting(it)
            Lo += SampleBSDF(it)
            Lo += SampleIndirectLighting(it) // Recursive step
            cost += tracingCost
        return cost, Lo
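The loop above runs an integer number of times, while a splitting factor is typically real-valued. One way to bridge the two, sketched here under the assumption that stochastic rounding is used, is to round the factor up with probability equal to its fractional part and weight each continuation by the inverse of the factor. The names `sampleCount` and `continuationWeight` are hypothetical:

```cpp
#include <cassert>
#include <cmath>

// Turn a real-valued splitting factor into an integer sample count.
// `u` is a uniform random number in [0, 1). The fractional part of
// `factor` is rounded up with probability equal to itself, so the
// expected count equals `factor`.
inline int sampleCount(double factor, double u) {
    assert(factor >= 0.0 && u >= 0.0 && u < 1.0);
    return static_cast<int>(std::floor(factor + u));
}

// Weight applied to each continued sample so that the expected total
// contribution is unchanged by roulette or splitting.
inline double continuationWeight(double factor) {
    assert(factor > 0.0);
    return 1.0 / factor;
}
```

With a factor of 0.3 the path survives about 30% of the time and each survivor is weighted by 1/0.3. With a factor of 2.5 the path splits into 2 or 3 continuations with equal probability.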

Additionally the integrator is augmented with -

    Render(scene, buffer)
        for spp = 1 ... n do
            initializeStatistics(cost, variance)
            for px : buffer
                ray = cameraRay(px)
                Lr, cost, variance = Radiance(scene, ray)
            I = denoise(buffer)
            for block B : cache
                updateVariance(cost, variance)

So for each pass over the image, we denoise the buffer and use the result as an estimate of the expected image, from which we compute a local variance. The variance and cost are accumulated in the cache (octtree) and used along each path to produce an RRS value: 0 if the path should be terminated, and >= 1 if the path should be continued or split.
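As a rough illustration of what a cache bin might accumulate, here is a minimal sketch. It assumes scalar radiance and measures variance as the mean squared deviation from the denoised reference, which is a simplification of whatever the reference implementation stores. `BinStats` and its members are hypothetical names:

```cpp
#include <cassert>

// Hypothetical per-bin accumulator for the spatial cache.
struct BinStats {
    double sumCost = 0.0;     // total tracing cost recorded in this bin
    double sumSqError = 0.0;  // sum of squared deviations from the reference
    long count = 0;           // number of samples recorded

    // Record one path estimate, the denoised reference value it is
    // compared against, and the cost spent producing the estimate.
    void add(double estimate, double reference, double cost) {
        assert(cost >= 0.0);
        double d = estimate - reference;
        sumSqError += d * d;
        sumCost += cost;
        ++count;
    }

    // Average cost per recorded sample (0 for an empty bin).
    double meanCost() const { return count > 0 ? sumCost / count : 0.0; }

    // Mean squared deviation from the reference (0 for an empty bin).
    double variance() const { return count > 0 ? sumSqError / count : 0.0; }
};
```

Keeping only running sums means a bin can be updated once per pass without storing individual samples.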

Here is the current implementation of the tracer - earstracer.cpp

And the current implementation of the integrator - integrator.cpp

Next Steps

The remaining work is to map intersection points in the scene to the unit cube of the octtree, and to update values in the cache as estimates are computed. Once this is complete, both ADRRS and EARS will be enabled, and I can determine what changes to the test scene would best demonstrate the strengths of these techniques.
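The mapping step could look something like the sketch below. It assumes the scene has an axis-aligned bounding box with positive extent on every axis, and that each octtree level splits the unit cube at its midpoint. `Vec3`, `toUnitCube`, and `descend` are hypothetical names, not part of the renderer:

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };

// Map a world-space point into [0,1]^3 using the scene bounds [lo, hi].
// Points outside the bounds are clamped onto the cube.
inline Vec3 toUnitCube(const Vec3& p, const Vec3& lo, const Vec3& hi) {
    auto norm = [](double v, double a, double b) {
        assert(b > a);
        double t = (v - a) / (b - a);
        return t < 0.0 ? 0.0 : (t > 1.0 ? 1.0 : t);
    };
    return { norm(p.x, lo.x, hi.x), norm(p.y, lo.y, hi.y), norm(p.z, lo.z, hi.z) };
}

// Descend one octtree level. Returns the child index (0..7, one bit per
// axis) and remaps `p` into the unit cube of that child, so the function
// can be called repeatedly until a leaf is reached.
inline int descend(Vec3& p) {
    int index = 0;
    auto step = [&index](double& c, int bit) {
        c *= 2.0;
        if (c >= 1.0) { c -= 1.0; index |= bit; }
    };
    step(p.x, 1);
    step(p.y, 2);
    step(p.z, 4);
    return index;
}
```

Calling `descend` once per level while following the matching child pointer gives the bin that contains the intersection point.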