Albedo to EARS, Pt. 6 - Statistical Accumulators & The EARS Integrator

Hello again! It’s been a while - this post was pushed back a week to give me some time to relax over Spring Break. I took the chance to visit Death Valley before the weather turns ugly. Death Valley’s Badwater Basin is the lowest point in North America and is only 84.6 miles from the highest point in the (contiguous) United States - Mount Whitney.

Anyway, back to EARS. A quick recap of progress so far: parts 1-3 implemented the base rendering framework that would be extended with ADRRS and EARS. Part 4 introduced Intel’s Open Image Denoise and the octtree that caches the directional variance histograms used to select Russian roulette and splitting (RRS) values. In part 5, I implemented the EARS formulation of forward path tracing, which traverses paths, performs RRS, and produces per-pixel estimates. In this post, I’ll discuss how I implemented the statistical accumulators that store the results of each iteration, and how all of these pieces come together in the EARS integrator.

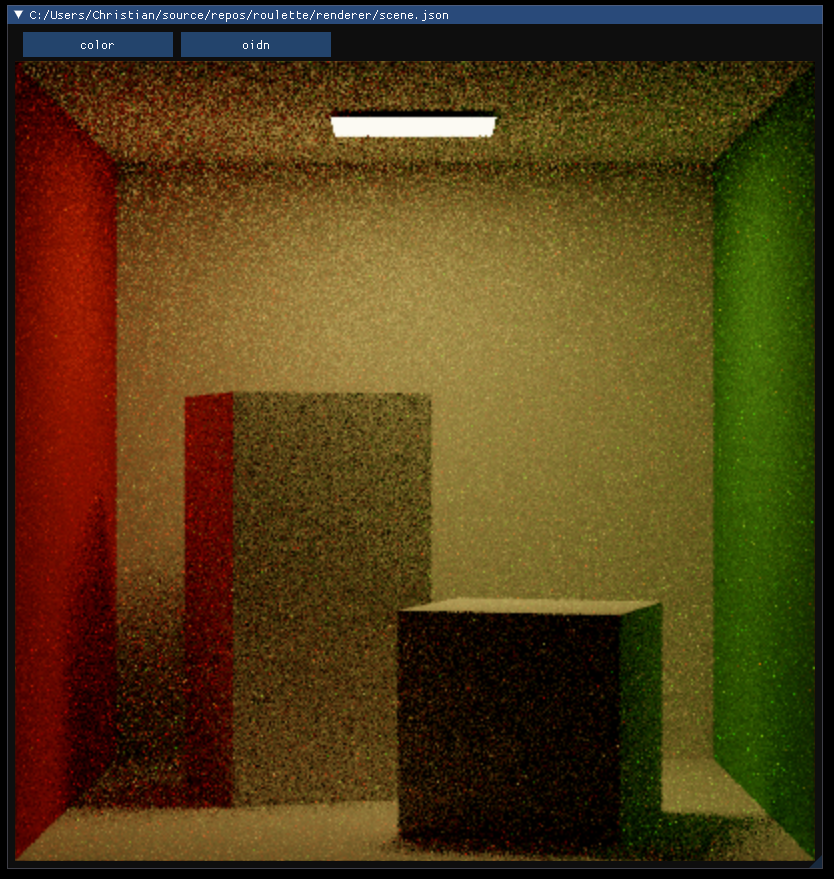

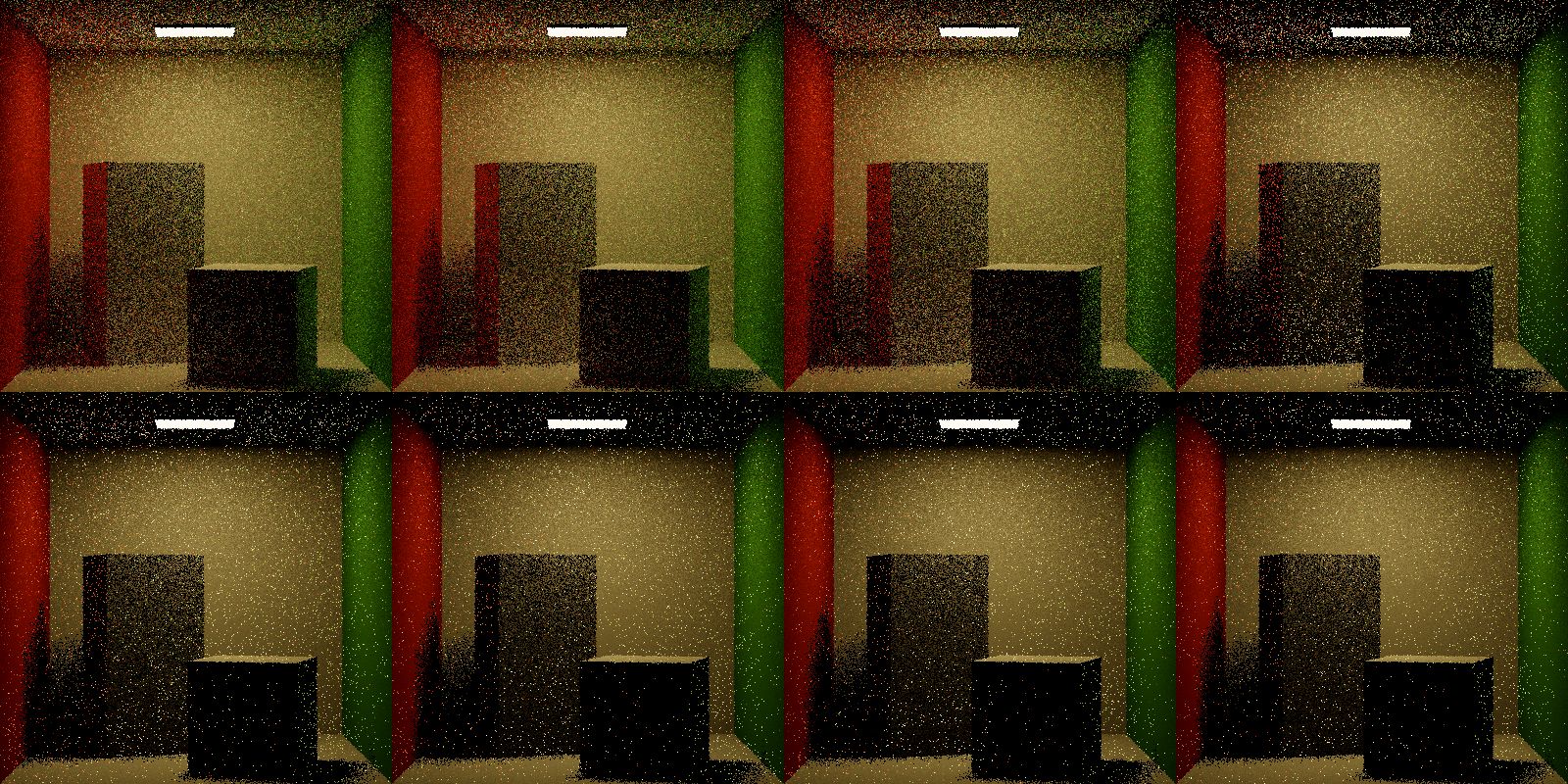

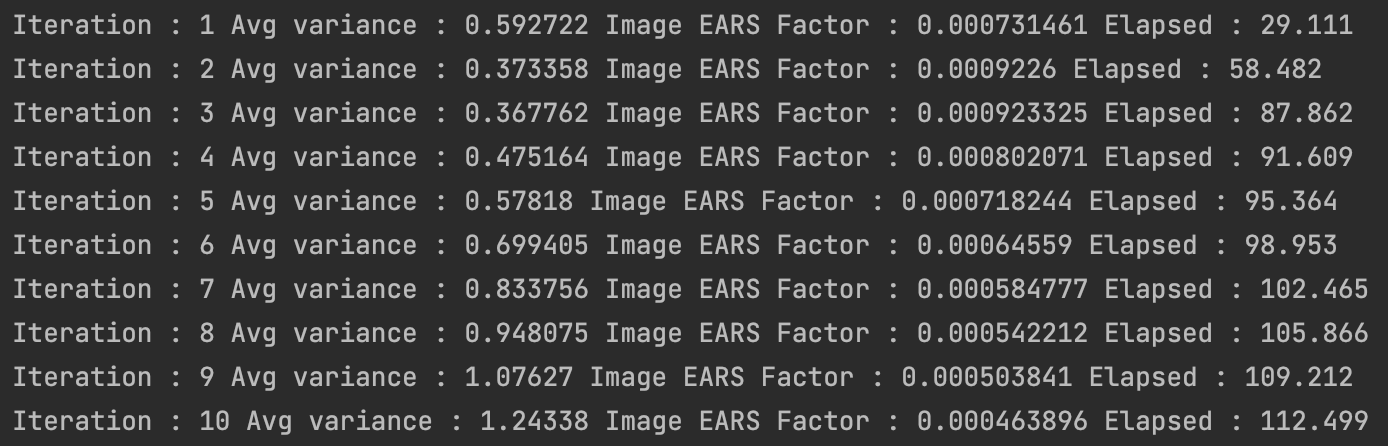

As usual, some results -

Above are the images from the first 8 iterations of training RRS parameters over the scene. Clearly something isn’t working as expected - the average variance over the image grows with each iteration instead of shrinking. I’ll explain my thoughts on likely causes, and my plan to fix them, later in the post.

Project proposal: project-proposal.pdf

First post in the series: Directed Research at USC

GitHub repository: roblesch/roulette

Statistical Accumulators

There are two statistical accumulators that are updated on every iteration of the EARS integrator. The first is the spatial cache (octtree) discussed and implemented in previous posts, which stores the estimated reflected radiance \(L_r\) and the estimated cost of computing it. The cache bins these estimates at each bounce along a path, so that later paths can look up the nearest cached estimate and use it when deciding whether to perform Russian roulette or splitting.
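To make the accumulate-then-rebuild cycle of the cache a little more concrete, here is a minimal C++ sketch of an octree that stores the two quantities above. It is not the EARS `Octtree`: the names `CacheNode`, `CacheEstimate`, `splat`, `rebuild` and `lookup` are hypothetical, radiance is a scalar rather than RGB, nodes subdivide to a fixed maximum depth instead of splitting adaptively, and `rebuild()` simply replaces each node's estimate with the mean of the samples it received since the last rebuild.

```cpp
#include <array>
#include <cassert>
#include <memory>

struct Vec3 { double x, y, z; };

// Hypothetical cached values for one region of space (scalar L_r for brevity).
struct CacheEstimate {
    double lr = 0.0;     // mean reflected radiance in this region
    double cost = 0.0;   // mean cost of estimating it
    bool valid = false;  // false until the region has received samples and been rebuilt
};

// Hypothetical sketch, not the EARS Octtree API.
class CacheNode {
public:
    CacheNode(Vec3 lo, Vec3 hi, int depth) : lo_(lo), hi_(hi), depth_(depth) {}

    // Accumulate a training sample in this node and every node below it, down to maxDepth.
    void splat(const Vec3& p, double lr, double cost, int maxDepth) {
        lrSum_ += lr;
        costSum_ += cost;
        count_ += 1;
        if (depth_ >= maxDepth) return;
        const int i = childIndex(p);
        if (!children_[i]) children_[i] = makeChild(i);
        children_[i]->splat(p, lr, cost, maxDepth);
    }

    // Turn the sums gathered this iteration into lookup estimates, then reset the sums.
    void rebuild() {
        if (count_ > 0) estimate_ = {lrSum_ / count_, costSum_ / count_, true};
        lrSum_ = costSum_ = 0.0;
        count_ = 0;
        for (auto& c : children_)
            if (c) c->rebuild();
    }

    // Return the estimate of the deepest node containing p that has a valid estimate.
    CacheEstimate lookup(const Vec3& p) const {
        const auto& child = children_[childIndex(p)];
        if (child) {
            const CacheEstimate e = child->lookup(p);
            if (e.valid) return e;
        }
        return estimate_;
    }

private:
    Vec3 center() const {
        return {(lo_.x + hi_.x) / 2, (lo_.y + hi_.y) / 2, (lo_.z + hi_.z) / 2};
    }

    // Octant index: bit 0 = x, bit 1 = y, bit 2 = z (set when p is in the upper half).
    int childIndex(const Vec3& p) const {
        const Vec3 c = center();
        return (p.x >= c.x ? 1 : 0) | (p.y >= c.y ? 2 : 0) | (p.z >= c.z ? 4 : 0);
    }

    // Create the child node covering octant i of this node's bounds.
    std::unique_ptr<CacheNode> makeChild(int i) const {
        assert(i >= 0 && i < 8);
        const Vec3 c = center();
        Vec3 lo = lo_, hi = hi_;
        if (i & 1) lo.x = c.x; else hi.x = c.x;
        if (i & 2) lo.y = c.y; else hi.y = c.y;
        if (i & 4) lo.z = c.z; else hi.z = c.z;
        return std::make_unique<CacheNode>(lo, hi, depth_ + 1);
    }

    Vec3 lo_, hi_;
    int depth_;
    double lrSum_ = 0.0, costSum_ = 0.0;
    long count_ = 0;
    CacheEstimate estimate_;
    std::array<std::unique_ptr<CacheNode>, 8> children_;
};
```

Because every training sample is splatted into each node on the way from the root to a leaf, a lookup in a sparsely sampled octant falls back to the nearest ancestor that has data and still gets a coarser estimate.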

Original implementation: irath96/ears/octtree.h/Octtree

The second accumulator is the image statistics, which accumulates the per-pixel relative mean squared error (MSE) against the image estimate produced by denoising. It also performs outlier rejection so that especially noisy pixels don’t overly bias the estimated variance over the image. These statistics are also used to determine an appropriate RRS value along each path.
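Below is a minimal C++ sketch of this accumulator for scalar pixel values. The names `ImageErrorStats`, `record`, `rejectOutliers` and `averageError`, as well as the regularizer `epsilon` that keeps near-black reference pixels from dominating, are hypothetical. Outlier rejection here clamps each pixel’s relative MSE to the value at the (1 - fraction) quantile; that rule is my assumption for illustration, and the EARS `ImageStatistics` may reject outliers differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical sketch of per-pixel relative-error statistics (not the EARS ImageStatistics API).
class ImageErrorStats {
public:
    explicit ImageErrorStats(std::size_t pixelCount, double epsilon = 1e-2)
        : sum_(pixelCount, 0.0), count_(pixelCount, 0), epsilon_(epsilon) {}

    // Accumulate the relative squared error of one sample against the denoised reference.
    void record(std::size_t pixel, double estimate, double reference) {
        assert(pixel < sum_.size());
        const double diff = estimate - reference;
        sum_[pixel] += diff * diff / (reference * reference + epsilon_);
        count_[pixel] += 1;
    }

    // Per-pixel relative MSE, with every value clamped to the (1 - fraction) quantile.
    std::vector<double> rejectOutliers(double fraction) const {
        assert(fraction >= 0.0 && fraction <= 1.0);
        std::vector<double> err(sum_.size(), 0.0);
        for (std::size_t i = 0; i < err.size(); ++i)
            if (count_[i] > 0) err[i] = sum_[i] / count_[i];
        if (err.empty()) return err;
        std::vector<double> sorted = err;
        const auto k = static_cast<std::size_t>((1.0 - fraction) * (sorted.size() - 1));
        std::nth_element(sorted.begin(), sorted.begin() + static_cast<std::ptrdiff_t>(k), sorted.end());
        const double threshold = sorted[k];
        for (double& e : err) e = std::min(e, threshold);
        return err;
    }

    // Image-wide average relative MSE after outlier rejection.
    double averageError(double fraction) const {
        const std::vector<double> err = rejectOutliers(fraction);
        if (err.empty()) return 0.0;
        return std::accumulate(err.begin(), err.end(), 0.0) / err.size();
    }

private:
    std::vector<double> sum_;   // summed relative squared errors per pixel
    std::vector<long> count_;   // number of samples recorded per pixel
    double epsilon_;
};
```

On a five-pixel image where one pixel has a huge error, `averageError(0.2)` clamps that pixel to the next-largest error, so the image-wide average is no longer dominated by a single outlier.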

Original implementation: irath96/ears/recursive_path.cpp/ImageStatistics

Integrator

With the spatial cache, the tracer, denoising, and image statistics in place, everything needed to generate RRS values with the EARS algorithm is ready. The piece that brings all of these steps together is the integrator. The integrator runs multiple iterations over the image: it trains the cache, stores variance estimates in the image statistics, denoises to produce an image estimate, and merges the per-iteration images according to their estimated variance.

It looks something like this:

```
EARSIntegrator::render(Scene scene, FrameBuffer frame):
    // render the albedo and normals for denoising
    albedo, normals = renderDenoisingAuxillaries(scene)
    spatialCache = initializeSpatialCache()
    imageStatistics = initializeImageStatistics()
    finalImage = initializeFinalImage()
    foreach iteration in timeBudget:
        // classic RR for the first 3 iterations, then EARS
        rrsMethod = iteration < 3 ? Classic() : EARS()
        iterationImg.clear()
        foreach spp in iterationTimeBudget:
            foreach pixel in image:
                iterationImg.add(earsTracer.trace(pixel, rrsMethod))
        // reject variance outliers
        imageStatistics.applyOutlierRejection()
        // train the cache
        spatialCache.rebuild()
        // merge images by variance
        finalImage.add(iterationImg, spp, imageStatistics.avgVariance())
        // produce a denoised estimate for the next iteration
        earsTracer.denoised = OIDN::denoise(iterationImg, albedo, normals)
    frame.result = finalImage.develop()
```

Original implementation: irath96/ears/recursive_path.cpp/render(), renderTime(), renderBlock()
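One common way to merge iterations "by variance" is inverse-variance weighting, where each iteration’s image is weighted by one over its estimated variance. The C++ sketch below assumes that scheme with a single scalar variance per iteration (like the image-average value passed to `finalImage.add`); the class name `VarianceWeightedImage` and its methods are hypothetical, and this is not necessarily how the EARS `render()` combines iterations.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: combine per-iteration images, weighting each by 1 / variance.
class VarianceWeightedImage {
public:
    explicit VarianceWeightedImage(std::size_t pixelCount) : weightedSum_(pixelCount, 0.0) {}

    // Add one iteration's image together with its estimated (image-average) variance.
    void add(const std::vector<double>& image, double variance) {
        assert(image.size() == weightedSum_.size());
        assert(variance > 0.0);
        const double w = 1.0 / variance;
        for (std::size_t i = 0; i < image.size(); ++i) weightedSum_[i] += w * image[i];
        totalWeight_ += w;
    }

    // Inverse-variance weighted mean of every image added so far.
    std::vector<double> develop() const {
        std::vector<double> out(weightedSum_.size(), 0.0);
        if (totalWeight_ <= 0.0) return out;
        for (std::size_t i = 0; i < out.size(); ++i) out[i] = weightedSum_[i] / totalWeight_;
        return out;
    }

    // Variance of the combined estimate, assuming the iterations are independent.
    double combinedVariance() const { return totalWeight_ > 0.0 ? 1.0 / totalWeight_ : 0.0; }

private:
    std::vector<double> weightedSum_;  // sum over iterations of image / variance
    double totalWeight_ = 0.0;         // sum over iterations of 1 / variance
};
```

An iteration with three times the variance of another contributes a third of the weight. If the available variance is per sample rather than per image, dividing it by the iteration’s spp first gives the variance of that iteration’s image.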

Issues

Something isn’t working out. There are a handful of possible sources of error, each of which is hard to pin down without solid references to debug against. The cache might not be updating properly, or its values might be weighted incorrectly. The image statistics could have similar issues.

There is also a difference in iteration time: my renderer is single-threaded on the CPU, while Mitsuba is heavily parallelized, so its iterations complete vastly faster. I experimented with some Vulkan code samples for GPU-accelerated PBR, and the accelerated tracer can render a couple hundred samples per pixel in the time my renderer renders one. I can work around this by forcing a fixed number of iterations and SPP, which I might derive from some of the supplemental charts provided with the paper.

Additionally, in the authors’ implementation, the image statistics perform outlier rejection per block rather than once per image iteration, meaning that outlier rejection runs more often and rejects more outliers.

Next Time

I have a lot of painful debugging ahead of me - but the end is in sight. With all the pieces in place, all that’s left to do is tune them up. Check in next time to see if I was able to get things working. As always, thank you for reading.

Footnotes

I have big dreams of wrapping this project in an interactive, GPU-accelerated hybrid renderer built on Vulkan. For now, I just wanted to mess around with ImGui, so I displayed the outputs in a GLFW window, drawing them to a texture alongside some buttons.